So far we have discussed several standard methods of influencing DB2’s access path selection -- in the first 4 parts of this series (1, 2, 3, 4) -- as well as updating DB2 Catalog statistics in part 5. But there is another tool in your DBA arsenal that you can use to force/influence access path selection - optimization hints, which will be the focus of today's post.

Using Optimization Hints to Force an Access Path

Optimization hints can be coded to influence the DB2 Optimizer's choice of access path. Actually, though,

this method does not “influence” the access path; instead it directs DB2 to use

a specific access path instead of determining a new access path using

statistics.

The same basic cautions that apply to modifying DB2 Catalog

statistics also apply to optimization hints. Only experienced analysts and DBAs

should attempt to use optimization hints. However, optimization hints are much

easier to apply than updating DB2 Catalog statistics.

There are several methods of implementing optimization hints. First, you can code optimization hints using the PLAN_TABLE.

However, before you can use optimization hints, the DB2 DSNZPARM parameter for

optimization hints (OPTHINTS) must be set to YES.

If it is set to NO, you cannot use optimization hints.

There are two ways to specify an optimization hint to the PLAN_TABLE:

- Modify PLAN_TABLE data to use an access path that was

previously created by the DB2 optimizer, or;

- INSERT

rows to the PLAN_TABLE to create a new access path

independently.

In general, favor the first method over the second . It is

a difficult task to create from scratch an accurate access path in the PLAN_TABLE.

If you do not get every nuance of the access path correct, it is possible that

DB2 will ignore the optimization hint and calculate an access path at bind

time. However, if you use an access path that was originally created by DB2,

you can be reasonably sure that the access path will be valid. Of course, sometimes an access path created for an older version of DB2 will

not be valid in a newer version of DB2.

You should consider using optimization hints for the same reasons

you would choose to modify DB2 Catalog statistics or tweak SQL. The general

reason is to bypass the access path chosen by DB2 and use a different, hopefully

more efficient, access path.

In addition to this reason, optimization hints are very useful as

you migrate from release to release of DB2. Sometimes, a new release or version

of DB2 can cause different access paths to be chosen for queries that were running

fine. Or perhaps new statistics were accumulated between binds causing access

paths to change. By saving old access paths in a PLAN_TABLE,

you can use optimization hints to direct DB2 to use the old access paths

instead of the new, and perhaps undesirable, access paths due to the new

release or statistics.Of course, ever since DB2 9 for z/OS, you can use the plan management feature

to save old access paths across rebinds. This is a more effective approach than

saving them yourself.

Always test and analyze the results of any query that uses

optimization hints to be sure that the desired performance is being achieved.

Defining an Optimization Hint

To specify that an optimization hint is to be used, you

will have to ensure that the PLAN_TABLE has the appropriate columns in it:

OPTHINT VARCHAR(128) NOT NULL WITH DEFAULT

HINT_USED VARCHAR(128) NOT NULL WITH DEFAULT

PRIMARY_ACCESSTYPE CHAR(1) NOT NULL WITH DEFAULT

To set an optimization hint, you need to first identify (or create) the PLAN_TABLE rows that refer to the desired access path. You will then need to update those rows in the PLAN_TABLE, specifying an identifier for the hint in the OPTHINT column. For example,

UPDATE PLAN_TABLE

SET OPTHINT = ‘SQLHINT’

WHERE PLANNO = 50

AND APPLNAME = ‘PLANNAME’;

Of course, this is just an example. You may need to use other predicates to specifically identify the PLAN_TABLE rows to include in the optimization hint. Some columns that might be useful, depending on your usage of dynamic SQL and packages, include QUERYNO, PROGNAME, VERSION, and COLLID.

Keep in mind, though, that when you change a program that uses static SQL statements, the

statement number might change, causing rows in the PLAN_TABLE to be out

of sync with the modified application. For this reason, you should probably choose the newer method of specifying optimization hints, which I will discuss in a moment.

You can use the QUERYNO clause in SQL

statements to ease correlation of SQL statements in your program with your

optimization hints. Statements that use the QUERYNO

clause are not dependent on the statement number. To use QUERYNO,

you will need to modify the SQL in your application to specify a QUERYNO,

as shown in the following:

SELECT MGRNO

FROM DSN81010.DEPT

WHERE DEPTNO = ‘A00’

QUERYNO 200;

You can then UPDATE the PLAN_TABLE more easily using QUERYNO and be sure that the optimization hint will take effect, as shown in the following:

UPDATE PLAN_TABLE

SET OPTHINT = ‘SQLHINT’

WHERE QUERYNO = 200

AND APPLNAME = ‘PLANNAME’;

When the PLAN_TABLE is correctly updated (as well as possibly the application), you must REBIND the plan or package to determine if the hint is being used by DB2. When rebinding you must specify the OPTHINT parameter:

REBIND PLAN PLANNAME . . . OPTHINT(SQLHINT)

Be aware that the optimization hints may not actually be used by

DB2. For optimization hints to be used, the hint must be correctly specified,

the REBIND must

be accurately performed, and the environment must not have changed. For

example, DB2 will not use an access path specified using an optimization hint

if it relies on an index that has since been dropped.

Use EXPLAIN(YES) to verify whether the hint was

actually used. If the hint was used, the HINT_USED

column for the new access path will contain the name of the optimization hint

(such as SQLHINT in the previous example).

Optimization Hints and DB2 10 for z/OS

As of DB2 Version 10, applying optimization hints is much easier

than it was in prior versions of DB2. You can build the access path repository

using the BIND QUERY command. The access path repository

contains system-level access path hints and optimization options.

These statement-level optimization hints, also known as

instance-based statement hints, are enforced across the entire DB2 subsystem

based upon the actual text of the SQL statement.

The access path repository contains information about queries

including the text of the text, access paths, and optimization options. Using

the access path repository, you can save multiple copies of access paths and

switch back and forth between different copies of access paths for the same

query. The access path repository resides in the DB2 Catalog and is composed of

the following tables:

- The primary table

in the access path repository is SYSIBM.SYSQUERY. It

contains one row for each static or dynamic SQL query.

- SYSIBM.SYSQUERYPLAN

holds access path details for queries in SYSQUERY.

A query can have more than one access path for it.

- SYSIBM.SYSQUERYOPTS

stores miscellaneous information about each query.

The access path repository tables are populated when you issue

the BIND

QUERY command. Before running BIND QUERY,

though, you must first populate the DSN_USERQUERY_TABLE

with the query text you want to bind. Table 1 below depicts the columns of the DSN_USERQUERY_TABLE.

Table 1. DSN_USERQUERY_TABLE Columns

Column

|

Description

|

QUERYNO

|

An integer value

that uniquely identifies the query. It can be used to correlate data in this

table with the PLAN_TABLE.

|

SCHEMA

|

The default schema name to be used for unqualified database

objects used in the query (or blank).

|

HINT_SCOPE

|

The scope of the access plan hint:

System-level access plan hint

Package-level access plan hint

|

QUERY_TEXT

|

The actual text of the SQL statement.

|

USERFILTER

|

A filter name that can be used to group a set of queries together

(or blank).

|

OTHER_OPTIONS

|

IBM use only (or blank).

|

COLLECTION

|

The collection name of the package (option for package-level

access plan hints).

|

PACKAGE

|

The name of the package (option for package-level access plan

hints).

|

VERSION

|

The version of the package (option for package-level access plan

hints); if '*' is specified, DB2 uses only COLLECTION

and PACKAGE

values to look up rows in the SYSIBM.SYSPACKAGE and SYSIBM.SYSQUERY catalog tables.

|

REOPT

|

The value of the REOPT BIND parameter:

A: REOPT(AUTO)

1: REOPT(ONCE)

N: REOPT(NONE)

Y: REOPT(ALWAYS)

blank:

Not specified

|

STARJOIN

|

Contains an indicator specifying whether star join processing was

enabled for the query:

Y: Yes, star join enabled

N: No, star join disabled

blank:

Not specified.

|

MAX_PAR__DEGREE

|

The maximum degree of parallelism (or -1 if not specified).

|

DEF_PAR_DEGREE

|

Indicates whether parallelism was enabled:

ONE: Parallelism disabled

ANY: Parallelism enabled

Blank:

Not specified

|

SJTABLES

|

Contains the minimum number of tables to qualify for star join

(or [nd]1 if not specified).

|

QUERYID

|

Identifies access plan hint information in the SYSIBM.SYSQUERY and SYSIBM.SYSQUERYPLAN

tables.

|

OTHER_PARMS

|

IBM use only (or blank).

|

You can populate the DSN_USERQUERY_TABLE using

simple INSERT

statements. Consider using a subselect from the DB2 Catalog to assist, such as

in the following example:

INSERT INTO DSN_USERQUERY_TABLE

(QUERYNO, SCHEMA, HINT_SCOPE, QUERY_TEXT, USERFILTER,

OTHER_OPTIONS, COLLECTION, PACKAGE, VERSION, REOPT,

STARJOIN, MAX_PAR_DEGREE, DEF_CURR_DEGREE, SJTABLES,

OTHER_PARMS)

SELECT SPS.STMTNO, 'SCHEMANAME', 1, SPS.STATEMENT, '',

'', SPS.COLLID, SPS.NAME, SPS.VERSION, '',

'', -1, '', -1, ''

FROM SYSIBM.SYSPACKSTMT SPS

WHERE SPS.COLLID = 'COLLID1'

AND SPS.NAME = 'PKG1'

AND SPS.VERSION = 'VERSION1'

AND SPS.STMTNO = 177;

This particular INSERT statement retrieves data from SYSIBM.SYSPACKSTMT for statement number 177 of VERSION1 of the PKG1 package in collection COLLID1.

You still must populate the PLAN_TABLE with hints. Then you need to run the BIND QUERY command to build the data in the access path repository. Running BIND QUERY LOOKUP(NO) reads the statement text, default schema, and bind options from DSN_USERQUERY_TABLE, as well as the system-level access path hint details from correlated PLAN_TABLE rows, and inserts the data into the access path repository tables.

After you have populated the access path repository, it is a good idea to delete the statement from the DSN_USERQUERY_TABLE. Doing so ensures that hints are not replaced when you issue subsequent BIND QUERY commands.

When a statement-level hint is established, DB2 tries to enforce it for that statement. Hints for static SQL statements are validated and applied when you REBIND the package that contains the statements. Hints for dynamic SQL statements are validated and enforced when the statements are prepared.

To remove hints from the access path repository, use the FREE QUERY command.

Runtime Options Hints

You can also use BIND QUERY to set

run-time options for a given SQL statement text. This allows you to control how

a particular statement should behave without trying to force its actual access

path.

To specify a run-time options hint, INSERT a row into

DSN_USERQUERY_TABLE indicating the QUERYNO as you would for an optimization

hint. Before running BIND QUERY, however, make sure that the PLAN_TABLE does

not contain a corresponding access path for the query.

If the PLAN_TABLE

contains an access path when you run BIND QUERY LOOKUP(NO), the SYSIBM.SYSQUERYOPTS

table won't be populated. If you err and issue the BIND QUERY when

data exists in the PLAN_TABLE,

simply DELETE

the pertinent PLAN_TABLE

data and rerun the BIND

QUERY command.

The REOPT, STARJOIN, MAX_PAR_DEGREE,

DEF_CURR_DEGREE,

SJTABLES,

and GROUP_MEMBER

columns can be used to set the run-time options using DSN_USERQUERY_TABLE.

Summary

Optimization hints provide a strong option for particularly vexing DB2 tuning problems. Consider investigating their usage for troublesome queries that once ran efficiently (and you still have the PLAN_TABLE data) but for whatever reasons, are no longer being optimized effectively.

On Wednesday, the focus of the general session was on IBM’s

acquisition of The Weather Company’s technology. The deal calls for IBM to acquire The Weather

Company’s B2B, mobile and cloud-based web properties, including WSI, weather.com,

Weather Underground and The Weather Company brand. IBM will not be acquiring The

Weather Channel television station, which will license weather forecast data

and analytics from IBM under a long-term contract. IBM intends to utilize its newly

acquired weather data in its Watson platform.

On Wednesday, the focus of the general session was on IBM’s

acquisition of The Weather Company’s technology. The deal calls for IBM to acquire The Weather

Company’s B2B, mobile and cloud-based web properties, including WSI, weather.com,

Weather Underground and The Weather Company brand. IBM will not be acquiring The

Weather Channel television station, which will license weather forecast data

and analytics from IBM under a long-term contract. IBM intends to utilize its newly

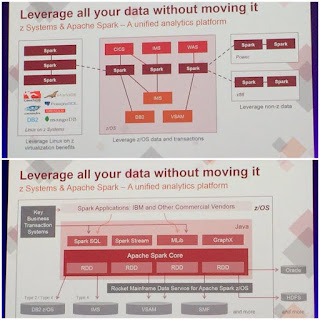

acquired weather data in its Watson platform. Apache Spark was another

pervasive topic this year. It was talked about in multiple sessions and I even

had the opportunity to play with it in a hands-on lab. The big news for z folks

is that IBM is bringing out a version of Spark for the mainframe that will run

on z/OS – it is already supported on zLinux.

Apache Spark was another

pervasive topic this year. It was talked about in multiple sessions and I even

had the opportunity to play with it in a hands-on lab. The big news for z folks

is that IBM is bringing out a version of Spark for the mainframe that will run

on z/OS – it is already supported on zLinux. As usual, and well-you-know if you've ever attended this conference before, there was also a LOT of walking to be done. From the hotel to the conference center to the expo hall to lunch to the conference center. But at least there were some signs making light of the situation this year!

As usual, and well-you-know if you've ever attended this conference before, there was also a LOT of walking to be done. From the hotel to the conference center to the expo hall to lunch to the conference center. But at least there were some signs making light of the situation this year!