Every year this week those of us in the USA take time out to give thanks for all that we have. We do this by taking time off of work, gathering with our families, eating turkey (and a lot of other stuff), and watching football.

It is one of my favorite holidays as it offers most of the joys of Christmas without many of the trappings.

So with this in mind, I'd like to wish all of my readers -- whether you reside here in the USA or anywhere in the world -- a very Happy Thanksgiving. Take some time to reflect on your good fortune... consider what you might be able to do to help others achieve success... and relax a bit and enjoy yourself...

We can talk about DB2 and databases again in December!

Wednesday, November 25, 2015

Wednesday, November 18, 2015

Midwest DB2 User Group (Dec 4, 2015)

Just a short blog post today to promote my upcoming speaking engagement at the Midwest DB2 User Group, in Chicago. If you are in or around the Chicagoland area on December 4th, 2015 I invite you to stop by and participate in the meeting!

The meeting starts at Noon and a free lunch is provided. I will be giving one of the 3 presentations that day. My presentation is titled Database and DB2 Trends circa 2015 - An overview of an industry in transition.... This is an ever-changing presentation that I have delivered on several occasions in the past, but not in exactly the same way. This pitch provides an overview of the transformation of data management over the course of the past few years. I discuss Big Data, analytics, NoSQL, and their impact on the modern data architecture and DB2 for z/OS in particular.

But that is not the only highlight of this event. Sheryl M. Larsen, now with BMC Software, will regale the group with the results of BMC's Annual Mainframe Research Survey. BMC started their mainframe survey ten years ago as a way to gain insight into the issues and challenges facing mainframe customers. And it always contains a lot of useful information and details for those of us in the business of mainframe computing.

The third speaker is Tim Lenahan, who I've been told will be presenting something a little bit different this time around. And having heard Tim speak in the past, I'm looking forward to what he has to say now!

So if you are going to be near Chicago in early December, register and attend the MWDUG meeting. I'm sure it will be worth your time!

Hope to see you there!

The meeting starts at Noon and a free lunch is provided. I will be giving one of the 3 presentations that day. My presentation is titled Database and DB2 Trends circa 2015 - An overview of an industry in transition.... This is an ever-changing presentation that I have delivered on several occasions in the past, but not in exactly the same way. This pitch provides an overview of the transformation of data management over the course of the past few years. I discuss Big Data, analytics, NoSQL, and their impact on the modern data architecture and DB2 for z/OS in particular.

But that is not the only highlight of this event. Sheryl M. Larsen, now with BMC Software, will regale the group with the results of BMC's Annual Mainframe Research Survey. BMC started their mainframe survey ten years ago as a way to gain insight into the issues and challenges facing mainframe customers. And it always contains a lot of useful information and details for those of us in the business of mainframe computing.

The third speaker is Tim Lenahan, who I've been told will be presenting something a little bit different this time around. And having heard Tim speak in the past, I'm looking forward to what he has to say now!

So if you are going to be near Chicago in early December, register and attend the MWDUG meeting. I'm sure it will be worth your time!

Hope to see you there!

Friday, November 13, 2015

A Quick and Dirty Guide to DB2 Performance

All performance problems are caused by change. Now as regular readers know, normally I avoid all-encompassing terms like all, always, none and never, but in this case, all is the appropriate term. If your DB2 environment is humming along and things are performing well, then the only thing that will disrupt that is a change of some form.

Change can take

many forms, not just a program change. Consider the following ways in which change can be introduced to your DB2 world:

- Physical changes to the environment, such as a new CPU, additional memory, new disk, or different storage technology (e.g. SSD).

- Changes to system software, such as a new release of a product (for example, QMF, CICS, or GDDM), the alteration of a product (for example, the addition of more or fewer CICS regions or an IMS SYSGEN), or a new product (for example, implementation of DFHSM).

- And let's not forget the installation of a new release or version of DB2, which can result in changes in access paths and the utilization of features new to DB2.

- An updated or new version of the operating system can also cause DB2 performance problems.

- Changes to the DB2 engine from maintenance releases, which can change the DB2 optimizer and other facets of DB2's operation.

- Changes in system capacity. More or fewer jobs could be executing concurrently when the performance problem occurs.

- Environment changes, such as the implementation of dynamic SQL, web-based programs or the adoption of Data Sharing.

- Database changes. This involves changes to any DB2 object, and ranges from adding a new column or an index, to changing table space types, to dropping and re-creating an object.

- Changes to the application development methodology, such as usage of check constraints instead of application logic or the use of stored procedures.

- Changes to application code.

So regardless of how it may seem at times, performance problems are not caused by magic. Something tangible

changes, creating a performance problem in the application, database, or subsystem. The challenge of tuning is

to find the source of the change -- the root cause if you will, gauge its impact, and formulate a solution.

Although the majority of your problems will be

application-oriented, you will frequently need to explore the tuning opportunities outside of SQL and programming changes when

application tuning has little effect.

The following is a quick reference of the possible tuning options

for each environment:

To tune z/OS

- Change WLM parameters to enhance throughput for DB2, its allied agent, and/or programs.

- Modify swappability.

- Add memory.

- Upgrade CPU.

- Add zIIPs and tune SQL/program to take advantage of zIIP processors.

- Implement data sharing.

- Use an active performance monitor (enables tuning on the fly).

To tune the

teleprocessing environments

- Change the system generation parameters.

- Tune the program definition (PSBs and PPT entries).

- Modify the Attachment Facility parameters.

- Add or modify resource parameters (e.g. RDO)

- Use an active performance monitor (enables tuning on the fly).

To tune the DB2

subsystem

- Modify DSNZPARMs, for example to increase log buffers, increase or decrease the number of concurrent users, change lock escalation, and so on.

- Issue DISPLAY and ALTER BUFFERPOOL commands to review buffer pool usage characteristics, change buffer pool sizes, and increase or decrease buffer pool thresholds.

- Tune the DB2 Catalog, including dropping and freeing objects, executing MODIFY, reorganizing DB2 catalog table spaces and indexes, recovering the DB2 catalog indexes, building additional indexes on the DB2 catalog, tuning disk usage, and implementing data set shadowing.

- Perform DSNDB07 tuning.

To tune the DB2

database design

- Modify the logical and physical model.

- Modify and issue DDL.

- Execute ALTER statements.

- Ensure that proper parameters are specified.

- Implement table changes.

- Partition data into universal PBG table spaces.

- Spread non-partitioned objects over multiple devices using PIECESIZE.

- Add indexes.

- REORG tablespaces.

- REORG or RECOVER indexes.

- Consider or reconsider data compression.

- Denormalize the database design.

- Consider adding redundant tables.

To tune programs

- Perform SQL tuning.

- Tune the high-level language (such as COBOL or 4GL).

- Use a program restructuring tool.

- Run RUNSTATS.

- Execute EXPLAIN, modify your code, and REBIND.

- Examine the REOPT clause and whether it can help your specific code.

- Consider using SQL tweaks and/or hints.

- Use the OPTIMIZE FOR n ROWS clause.

- Consider activating query parallelism.

- Change locking strategies.

- Change the DB2 catalog statistics and REBIND.

- Use a testing tool to provide what if testing and tuning.

- Use a tool to sample the application's address space as it executes.

Of course, this is not an exhaustive list and should only be used

as a guideline for the

types

of tuning options available to you.

Hopefully this high-level overview of DB2 performance and various tuning options at your disposal has given you some food for thought as you tackle the task of keeping your DB2 environment operating smoothly and efficiently...

Monday, November 02, 2015

IBM Insight 2015 Wrap-Up

Last week I attended the IBM Insight conference and blogged

about the first few days of the conference here at http://db2portal.blogspot.com/2015/10/an-update-from-ibm-insight-2015.html…

and I promised to blog about the remainder of the conference, so here is a

synopsis of the highlights.

On Wednesday, the focus of the general session was on IBM’s

acquisition of The Weather Company’s technology. The deal calls for IBM to acquire The Weather

Company’s B2B, mobile and cloud-based web properties, including WSI, weather.com,

Weather Underground and The Weather Company brand. IBM will not be acquiring The

Weather Channel television station, which will license weather forecast data

and analytics from IBM under a long-term contract. IBM intends to utilize its newly

acquired weather data in its Watson platform.

On Wednesday, the focus of the general session was on IBM’s

acquisition of The Weather Company’s technology. The deal calls for IBM to acquire The Weather

Company’s B2B, mobile and cloud-based web properties, including WSI, weather.com,

Weather Underground and The Weather Company brand. IBM will not be acquiring The

Weather Channel television station, which will license weather forecast data

and analytics from IBM under a long-term contract. IBM intends to utilize its newly

acquired weather data in its Watson platform.

The deal is expected to close in the first quarter of 2016.

Terms were not disclosed.

You can read all about the acquisition in this IBM press release.

I spent some of my time at

Insight this year learning more about dashDB and it is a very interesting

technology. Marketed as data warehousing in the cloud, IBM touts four use cases

for dashDB: standalone cloud data warehouse, as a store for data scientists, for

those implementing a hybrid data warehouse, and for NoSQL analysis and rapid

prototyping.

IBM promotes simplicity,

performance, analytics on both traditional and NoSQL, and polyglot language

support as the most important highlights of dashDB. And because it has DB2 BLU

under the covers IBM dashDB not only super-compresses data, but it can operate

on that data without necessarily decompressing it.

Additionally, a big theme of

the conference was in-memory technology, and dashDB sports CPU cache

capabilities. In fact, I heard several folks at the conference say some

variation of “RAM is too slow”… meaning that CPU cache is faster and IBM is moving

in that direction.

The bottom line for dashDB is

that it offers built-in high availability and workload management capabilities,

along with being in-memory optimized and scalable. Worth a look for folks

needing a powerful data warehousing platform.

For you DB2 for z/OS folks, IDAA

was a big theme of this year’s Insight conference. The latest version, V5.1,

adds advanced analytics capabilities and in database transformation, making

your mainframe queries that can take advantage of the accelerator faster than

ever.

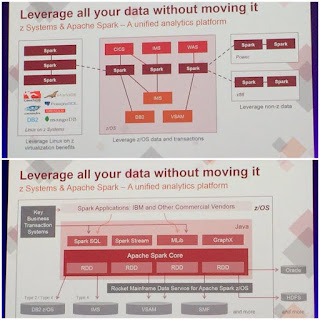

Apache Spark was another

pervasive topic this year. It was talked about in multiple sessions and I even

had the opportunity to play with it in a hands-on lab. The big news for z folks

is that IBM is bringing out a version of Spark for the mainframe that will run

on z/OS – it is already supported on zLinux.

Apache Spark was another

pervasive topic this year. It was talked about in multiple sessions and I even

had the opportunity to play with it in a hands-on lab. The big news for z folks

is that IBM is bringing out a version of Spark for the mainframe that will run

on z/OS – it is already supported on zLinux.

Of course, I attended a whole

slew of DB2 sessions including SQL coding, performance and administration

presentations. Some of the highlights include DB2 11 for LUW being announced, several discussions about dark data, and a lot of information about IBM's Big SQL and how it can be used to rapidly and efficiently access Hadoop (and other unstructured) data using SQL.

I live-tweeted a bunch of highlights of those sessions, too. Indeed, too many to include here, if you are

interested in catching everything I have to say about a conference, keep

reading these blog posts, of course, but you should really follow me on

Twitter, too at http://twitter.com/craigmullins

I also had the honor of delivering a presentation at this year's conference on the changes and trends going on in the world of DB2 for z/OS. Thanks to the 70 or so people who attended my session - I hope you all enjoyed it and learned something, too!

As usual, and well-you-know if you've ever attended this conference before, there was also a LOT of walking to be done. From the hotel to the conference center to the expo hall to lunch to the conference center. But at least there were some signs making light of the situation this year!

As usual, and well-you-know if you've ever attended this conference before, there was also a LOT of walking to be done. From the hotel to the conference center to the expo hall to lunch to the conference center. But at least there were some signs making light of the situation this year!

There was a lot of fun to be

had at the conference, too. The vendor exhibition hall was stocked with many

vendors, big and small, and it seems like they all had candy. I guess that’s

what you get when the conference is so close to Halloween! The annual Z party

at the House of Blues (for which you need a Z pin to get in – this year’s pin

was orange) was a blast and the Maroon 5 concert courtesy of Rocket Software

was a lot of fun, too.

If you are looking for a week

of database, big data, and analytics knowledge transfer, the opportunity to

chat and connect with your peers, as well as some night-time entertainment, be

sure to plan to attend next year’s IBM Insight conference (October 23 thru 27,

2016 at the Mandalay Bay in Las Vegas).

Monday, October 26, 2015

An Update from IBM Insight 2015

The

annual IBM Insight conference is being held this week in Las Vegas, as usual at

the Mandalay Bay Resort and Conference Center. And I have the good fortunate to

be in attendance.

If you

follow me on Twitter (http://www.twitter.com/craigmullins)

I am live tweeting many of the sessions I am attending at the conference. But

for those of you who are not following along on Twitter, or just prefer a

summary, here’s a quick overview of Monday’s highlights.

The

day kicked off with the general session keynote which was delivered by a

combination of Jake Porway (of DataKind), IBMers and IBM customers. The theme of

the keynote was analytics and cognitive computing. The emphasis, in my opinion,

of the event has kind of shifted from the data to what is being done with the

data… in other words, the applications. And that is interesting, because the

data is there to support the applications, right? That said, I’m a data bigot

from way back, so it was a bit app-heavy for me.

That

said, there were some insightful moments delivered during the keynote. Bob

Picciano, Senior VP of IBM Analytics, kicked things off by informing the

audience that true insight comes from exploiting

existing data, dark data, and IoT data with agility driven by the cloud. That’s

a lot of buzzwords, but it makes sense! And then executives from Whirlpool,

Coca-Cola, and Twitter were brought out to talk about how they derive insight

from their data and systems.

Perhaps

the most interesting portion of the general session was the Watson discussion,

led by Mike Rhodin, Senior VP, IBM Watson. We learned more about cognitive

systems and how they get more valuable over time as they learn, which makes

them unique in the world of computers and IT. IBM shared that there are more

than 50 core technologies used by IBM Watson to deliver its cognitive computing

capabilities. It was exciting to learn about systems that reason, never stop learning and drive more value

over time.

Additional apps

were discussed that let us learn about the various ways to choose wine, that

nobody starts thinking about ice cream early in the week and that when the

weather changes women buy warmer clothes; men buy beer and chips. You kind of had to be

there, I guess!

Of

course, this was only the first session of a long day. Additional highlights of

the day included a high-level overview of the recently announced (but not yet

released) DB2 12 for z/OS, the features that should be in a next generation

database, and Gartner’s take on the state of big data and analytics. Let’s

briefly address each of these one by one.

Firstly,

DB2 12, which will be available in the ESP (early support program) in March

2016. There are a lot of nice new features that will be available in this new

version. We’ll see a lot more in-memory capabilities which will speed up

queries and processing. Buffer pools can be up to 16 TBs, even though today’s z

systems can support only 10 TBs – IBM is planning for the future with that one!

And we’ll

continue to see the blurring of the lines between static and dynamic SQL. How?

Well, we’ll get RLF for static SQL and plan stability for dynamic SQL in DB2 12.

IBM claims that we’ll be able to achieve up to 360

million txns/hour with DB2 12 for z/OS using a RESTful web API. Pretty

impressive.

And there will

be no skip-level migration for DB2 12... You have to migrate thru DB2 11 to get

to 12.

OK,

what about the features that should be in a next generation database? According

to IBM a next gen DBMS should:

- Deliver advanced in-memory technology

- Be fast for both transactional and analytic workloads

- Provide scalable performance

- Be available, reliable, resilient, and secure

- Be simple, intelligent and agile

- And be easy to deploy and cloud-ready

Sounds

about right to me!

And

finally, let’s briefly take a look at the some of the Gartner observations on

big data and analytics. The Gartner presentation was delivered by Donald

Feinberg, long-time Gartner analyst on the topic of data and database systems. First

of all, Feinberg rejects the term “big data” saying there is no such thing. It

is all just data. He went on to claim that “big data” is perhaps the most ambiguous

term out there, but it is also the most searched term at

Gartner!

Feinberg

also rejected the term data lake, saying “There is no such thing as a data

lake, it is just a place to dump data.” He warned that it will come back to bite organizations in a few

years if they do not take the time to manage, transform, secure, etc. the data

in the lake, turning it into a data reservoir, instead.

He

also made the very interesting observation that BI/analytics was the number 1

priority for CIOs on Gartner’s annual CIO priorities survey and that it has

been in that slot for 8 years running. But if analytics

was really that much of a priority why haven't they gotten it done yet?

Of course, a lot more

happened at IBM Insight today – and many more things were discussed. But I don’t

want this blog post to become too unwieldy, so that is all I’m going to cover

for now.

I’ll try to cover more later in the week as the conference

progresses.

Labels:

analytics,

BI,

data lakwe,

DB2 12,

Watson

Subscribe to:

Comments (Atom)