I had the good fortune to attend the North American IDUG Db2 Tech Conference in Atlanta, Georgia the week of June 8 through June 12, 2025, and as usual, the conference was phenomenal. If you are a Db2 developer, DBA, consultant, or user of any type, there was a ton of content being shared. And there were many opportunities to mingle with peers to discuss and share your Db2 tips, tricks, and experiences. That is probably the most beneficial part of the whole IDUG experience.

I’ve been going to IDUG conferences for a long time now. I’ve been to them all except the very first one. And yes, IDUG has changed a lot over the years, but it is still the preeminent user experience for Db2 professionals… that is, it is not to be missed!

So there I was on Monday morning, one of the many attendees filing into the opening session expectantly...

I took a seat among the crowd... and the first key takeaway from the event for me is that there are more new attendees going to IDUG than ever before. It was stated at the opening session that about 10 percent of attendees were first-timers. That is great news because the more new people exposed to IDUG the better! Even better, it was announced that there are over 16,000 IDUG members now.

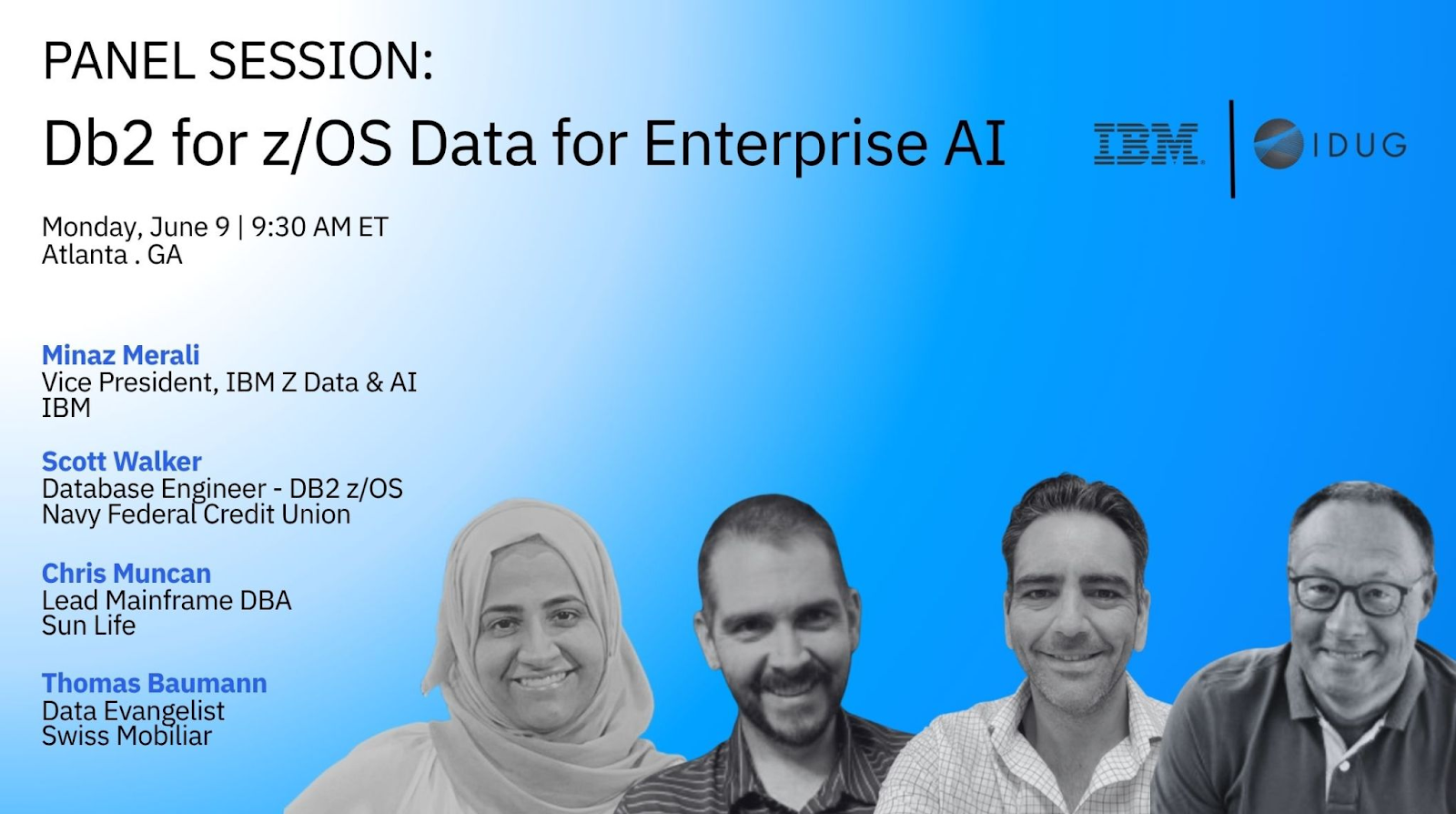

The first keynote session, on Monday, was sponsored by IBM and it was titled "Leveraging your Db2 Data for Enterprise AI." The keynote featured Minaz Merali, Vice President, IBM Z Data & AI and Priya Srinivasan, Vice President, IBM Core Software Products, Software Support & SRE. And yes, this session was heavy into IBM’s AI strategy, which is appropriate as AI is the driving force of IT these days. Indeed, it was said that IBM is branding itself as the hybrid cloud and AI company!

Another interesting tidbit from the keynote is that "Today only 1% of enterprise data is currently being leveraged by GenAI." So, we've still got a long way to go! Also, 90% of enterprise data is unstructured, which requires a completely different way of processing and analyzing than traditional, structured data.

The speakers also identified four primary ways to scale AI with Db2 across the enterprise: application building, human productivity, performance, and integration.

And it sure felt good to hear IBMers promoting Db2 loudly for all to hear. It sometimes feels like Db2 is a forgotten jewel that IBM doesn't promote as much as they should. But it does not feel that way at IDUG. The keynote speakers hammered home the point that IBM Db2 powers the modern economy!

The top ten largest banks, insurance, and auto companies all rely on Db2! And 70 percent of the world's transactions run on the IBM Z mainframe.

But perhaps my favorite comment of the IBM keynote session was made by a user, Chris Muncan (Sr. Mainframe Db2 DBA at Sun Life), who was participating as part of a user panel. He called “legacy” systems “legendary” instead! I think I'm going to use that.

As an aside, I started feeling old as I listened to people talking about 15 or 20 years of experience and realized that is still post-2000! I sometimes still think of 20 years ago as being in the 1980s!

I also delivered two presentations myself at IDUG. The first one was Monday, right after lunch, titled "Index Modernization in Db2 for z/OS." The general thrust of the presentation is that technology is evolving, and Db2 has changed a lot. As such, the same indexing strategies that worked well 10 or 20 or more years ago are no longer optimal. The presentation started with a brief review of the critical role of indexes and their history in Db2 for z/OS. Then I covered the many innovations IBM has applied to indexes in Db2 for z/OS over the past few releases including things like index compression, Fast Traverse Blocks (FTBs), and index features like include columns and indexing on expressions. Here I am talking about one of the newer index features, FTBs:

I also reviewed some of the many other changes that could impact indexing strategy, including changing data patterns, analytics and IDAA, data growth, and the surge in dynamic SQL. Then I took a look at ways to examine your current index deployment and to find opportunities to modernize and improve indexing at your shop.

And two lucky winners walked away with a copy of my book, A Guide to Db2 Performance for Application Developers.

Day two opened with a keynote session from Greg Lotko of Broadcom - a fun session tying local "treasures" of Atlanta to Broadcom's Db2 solutions.

- The great Women in Technology keynote session from day three "Harnessing the Power of Adaptability, Innovation, and Resilience" delivered by Jennifer Pharr Davis.

- The Db2 for z/OS Spotlight session where Haakon Roberts enlightened everybody on how to prepare for the future of Db2 for z/OS.

- And the Db2 for z/OS Experts Panel - which is always a highlight of the event for me - where a body of IBM and industry luminaries take questions about Db2 for z/OS from the attendees.

Finally, there were nightly events hosted by the vendors, but the only one I attended this year was the IBM outing held at the Georgia Aquarium. The aquarium is one of the largest in the world and it contains some very large aquatic beasties including whale sharks, beluga whales, manta rays, and more. Here are some photos: